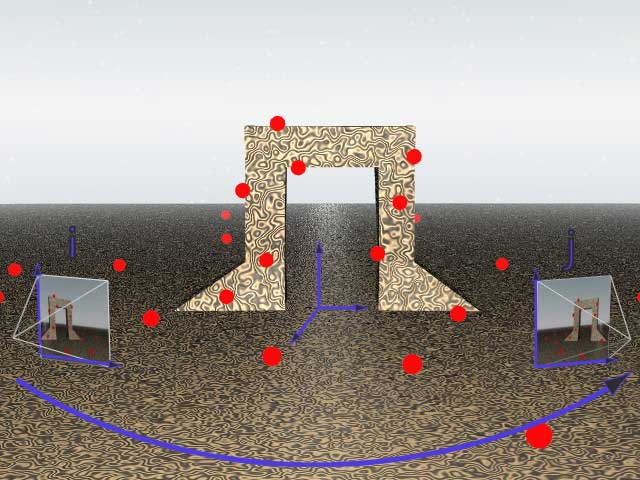

Match moving is primarily used to track the movement of a camera through a shot so that an identical virtual camera move can be reproduced in a 3D animation program. When new animated elements are composited back into the original live-action shot, they appear in perfectly matched perspective and therefore look seamless.

Match moving allows the insertion of computer graphics into live action footage with correct position, scale, orientation, and motion relative to photographed objects in the shot. The term is used loosely to refer to several ways of extracting motion information from a motion picture, particularly camera movement.

NEED FOR A MATCH MOVE

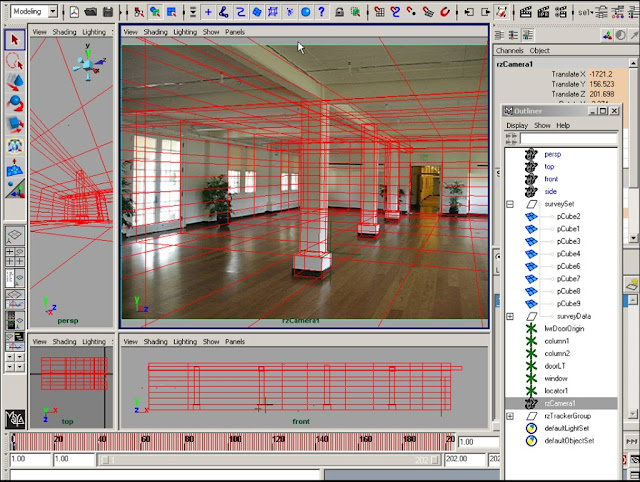

Whenever we need to put a 3D object into live-action footage, we also have to match the 3D camera to the live-action camera. This ensures that 3D objects are rendered with the same perspective as the live action, and it also helps the 3D elements interact with the live action. On a locked-off shot we can simply eyeball the 3D camera by loading the shot into any 3D software and adjusting the position and focal length until the grid lines up with the footage.

If there is any camera movement in a plate, we have to somehow copy that exact camera move into 3D. A real camera move is far too complex to be copied by hand, and this is the problem that match moving solves: it reads a shot and extracts the camera move in 3D. Anything we then create in 3D can be shot in exactly the same way.

PHOTOGRAMMETRY AND MATCH MOVE

When we hand an image to a computer, it has no three-dimensional idea of what it is looking at. We might see a road that leads into the landscape and perceive depth, but to the computer it is just a bunch of pixels on a flat plane, and it understands nothing three-dimensional about it. In fact, we understand the perspective only because we know what a road is. We only perceive real depth because we have two eyes placed apart, which give us two angles on the same scene. When our brain measures how differently things are positioned as seen from each eye, we understand our surroundings in real 3D.

Match moving is a similar way for the computer to analyse the change in perspective and extract a real three-dimensional scene. It does this using a principle called photogrammetry, which means extracting 3D data from photographs. The idea is that we take two photographs of the same thing from different angles and mark the exact same feature in each one, using a tracked feature. When we then project imaginary lines out from each camera through that feature, there is mathematically only one point where they can lock together. From this it is possible to calculate not only the 3D coordinates of the points but, more importantly, the 3D coordinates of the camera.
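The "only one point that can lock together" idea can be sketched in a few lines of Python. This is a minimal illustration, not how any particular match-moving package works: it assumes the two camera positions and the viewing directions towards the feature are already known, and the function name `triangulate_midpoint` is made up for this example. Because real measurements are noisy and the two lines rarely meet exactly, the sketch returns the midpoint of the shortest segment joining them.

```python
def dot(u, v):
    """Dot product of two 3D vectors given as sequences."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2):
    """Return the 3D point closest to two rays.

    c1, c2: camera centres (x, y, z)
    d1, d2: directions from each camera towards the same feature
    """
    # Vector from the second camera centre to the first
    w = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays carry no depth information
        raise ValueError("rays are parallel; cannot triangulate")
    # Distances along each ray to the points of closest approach
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [o + s * k for o, k in zip(c1, d1)]
    p2 = [o + t * k for o, k in zip(c2, d2)]
    # Midpoint of the shortest segment between the two rays
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two cameras one unit apart, both looking at the same feature
point = triangulate_midpoint((0, 0, 0), (0.5, 0, 2), (1, 0, 0), (-0.5, 0, 2))
```

A real solver works the other way round as well: it does not know the camera positions in advance and has to find cameras and points together from many tracked features.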

2 DIMENSIONAL TRACKING PROCESS

2D tracking involves identifying tracking features. A feature is a specific point in an image that a tracking algorithm can lock onto and follow through multiple frames. Features are often selected because they are bright or dark spots, edges or corners, depending on the particular tracking algorithm. What is important is that each feature represents a specific point on the surface of a real object. As a feature is tracked, it becomes a series of two-dimensional coordinates that represent the position of the feature across a series of frames. This series is referred to as a track. Once tracks have been created, they can be used immediately for 2D motion tracking or used to calculate the 3D information.
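To make the idea of a track concrete, here is a small Python sketch of one possible tracking approach: template matching by sum of squared differences. It is only an assumption for illustration (production trackers use more robust methods), frames are taken to be plain lists of rows of grayscale values, and the names `ssd` and `track_feature` are invented for this example.

```python
def ssd(frame, template, top, left):
    """Sum of squared differences between the template and a frame patch."""
    return sum(
        (frame[top + r][left + c] - template[r][c]) ** 2
        for r in range(len(template))
        for c in range(len(template[0]))
    )


def track_feature(frames, top, left, size=3, radius=2):
    """Follow a size x size patch through the frames.

    Returns the track: one (row, col) position of the patch's
    top-left corner per frame.
    """
    # The feature is defined by its appearance in the first frame
    template = [row[left:left + size] for row in frames[0][top:top + size]]
    track = [(top, left)]
    for frame in frames[1:]:
        height, width = len(frame), len(frame[0])
        # Only search near the previous position, clamped to the image
        candidates = [
            (r, c)
            for r in range(max(0, top - radius), min(height - size, top + radius) + 1)
            for c in range(max(0, left - radius), min(width - size, left + radius) + 1)
        ]
        # The best match is the candidate patch most similar to the template
        top, left = min(candidates, key=lambda p: ssd(frame, template, p[0], p[1]))
        track.append((top, left))
    return track
```

The returned list is exactly the "series of two-dimensional coordinates" described above, with one entry per frame.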

3 DIMENSIONAL TRACKING PROCESS

This process attempts to derive the motion of the camera by solving the inverse projection of the 2D paths for the position of the camera. It is referred to as calibration. When a point on the surface of a three-dimensional object is photographed, its position in the 2D frame can be calculated by a 3D projection function. We can consider the camera to be an abstraction that holds all the parameters necessary to model a camera in the real or a virtual world. A camera is therefore a vector whose elements include the position of the camera, its orientation, its focal length and any other parameters that define how the camera focuses light onto the film plane. These parameters help generate a large set of points in a 3D environment, placed according to the positions of the objects in the footage. This set of points is sometimes referred to as a point cloud or point cluster.
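The projection function and the "camera as a vector of parameters" can be sketched as follows. This is a simplified pinhole model under stated assumptions: orientation is reduced to a single yaw angle about the vertical axis, lens distortion is ignored, and the names `Camera`, `project` and `reprojection_error` are hypothetical, chosen for this example only.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    position: tuple      # camera centre in world space (x, y, z)
    yaw: float           # rotation about the vertical (y) axis, in radians
    focal_length: float  # in the same units as the image-plane coordinates


def project(camera, point):
    """Project a world-space 3D point onto the 2D image plane."""
    # Express the point relative to the camera centre
    x, y, z = (p - c for p, c in zip(point, camera.position))
    # Undo the camera's yaw so that the camera looks down +z
    cos_y, sin_y = math.cos(camera.yaw), math.sin(camera.yaw)
    xc = cos_y * x - sin_y * z
    zc = sin_y * x + cos_y * z
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Perspective divide: farther points land closer to the image centre
    return (camera.focal_length * xc / zc, camera.focal_length * y / zc)


def reprojection_error(camera, point, observed):
    """Distance between a projected 3D point and its tracked 2D position."""
    u, v = project(camera, point)
    return math.hypot(u - observed[0], v - observed[1])


cam = Camera(position=(0.0, 0.0, 0.0), yaw=0.0, focal_length=35.0)
image_xy = project(cam, (1.0, 2.0, 10.0))
```

Solving the inverse problem then amounts to searching for the camera parameters and 3D points that make `reprojection_error` small for every track in every frame; the 3D points that come out of that search form the point cloud.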
